How to Spot—and Fix—Biased Questions in Your Surveys

Stop making bad decisions with bad data. This guide exposes the most common biased questions in surveys and gives you frameworks to fix them, fast.

Biased questions contaminate your data. They give you the answers you want to hear, not the ones you need. Here's how to spot the 7 worst offenders and get feedback you can actually trust.

Here’s the TL;DR in 20 Seconds:

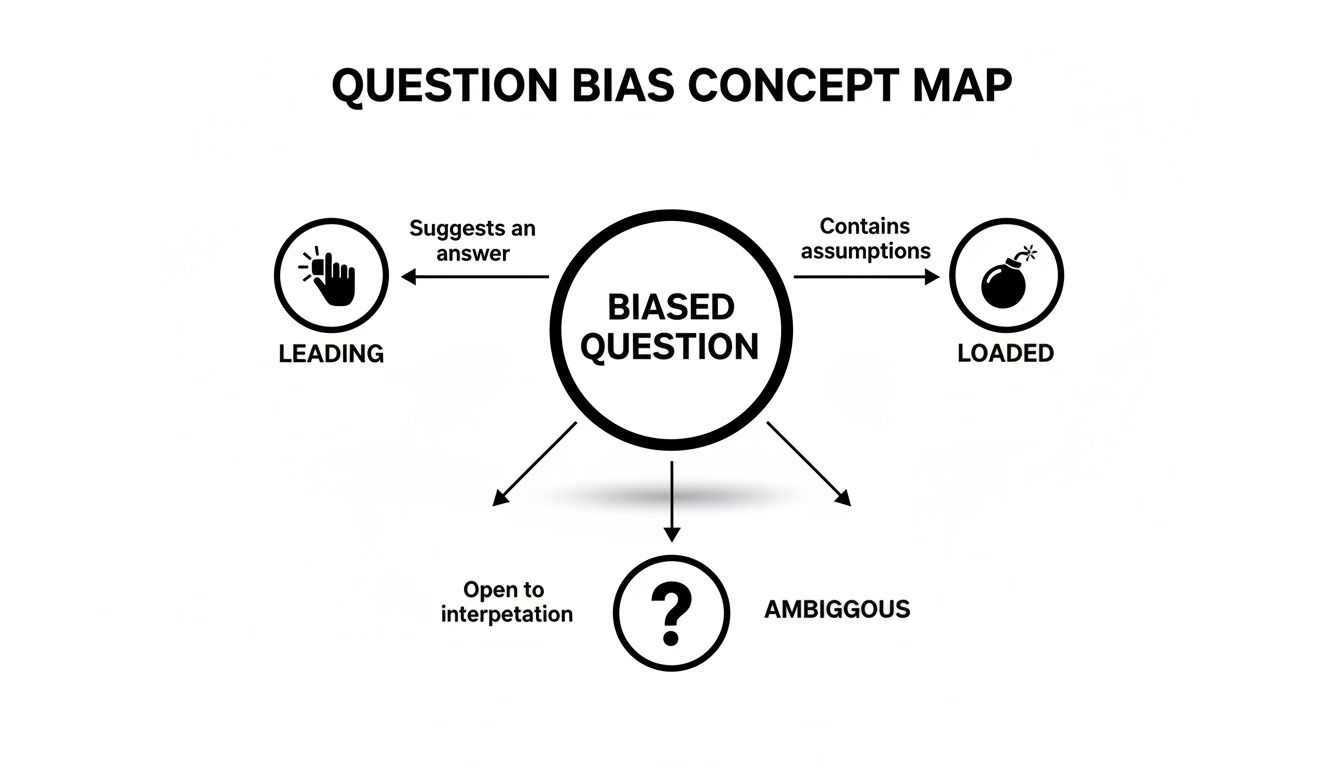

- Leading Questions nudge people toward your preferred answer. Fix: Use neutral language.

- Loaded Questions use assumptions or emotional words. Fix: Strip out the opinions.

- Double-Barreled Questions ask two things at once. Fix: Split them into two separate questions.

- Absolute Questions use words like "always" or "never." Fix: Use a frequency scale instead.

- Ambiguous Questions are vague and open to interpretation. Fix: Be painfully specific.

- Social Desirability makes people give "good" answers, not honest ones. Fix: Ask about actual behavior, not ideals.

- Your Real Problem is often sampling bias (asking the wrong people) or non-response bias (only hearing from the extremes).

The 7 Types of Biased Questions Wrecking Your Data

Most survey bias isn't some complex, hidden force. It usually comes down to a few predictable traps. Once you learn to spot these patterns, you won't be able to unsee them. Let's walk through the seven most common offenders that are quietly poisoning your data and pushing you to build the wrong things.

1. Leading Questions

Leading questions are the survey equivalent of a lawyer leading a witness. They subtly nudge respondents toward a particular answer, often the one you’re hoping to hear.

- Real Example: "How much did you enjoy our intuitive new dashboard?" This question assumes the user enjoyed it and labels it "intuitive" before they even answer.

- The Fix: "How would you rate the usability of our new dashboard?" This version is neutral. It asks for a rating on a specific attribute without pre-loading the answer.

Actionable Takeaway: Kill all positive or negative adjectives in your questions. If it injects an opinion, cut it.
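You can automate a first pass of this takeaway across a long question list. The minimal sketch below assumes you maintain your own list of opinion words. `OPINION_WORDS` and `flag_opinion_words` are hypothetical names, not part of any survey tool. The check only catches words on the list, so it supplements a human read rather than replacing it.

```python
import re

# Hypothetical starter list of opinion-laden adjectives; extend it with your own hype words.
OPINION_WORDS = {
    "amazing", "excellent", "frustrating", "groundbreaking",
    "innovative", "intuitive", "powerful", "seamless",
}


def flag_opinion_words(question: str) -> list[str]:
    """Return opinion-laden words found in a question, in order of appearance."""
    words = re.findall(r"[a-z']+", question.lower())
    return [word for word in words if word in OPINION_WORDS]


print(flag_opinion_words("How much did you enjoy our intuitive new dashboard?"))  # ['intuitive']
print(flag_opinion_words("How would you rate the usability of our new dashboard?"))  # []
```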

2. Loaded Questions

If leading questions are a gentle nudge, loaded questions are a hard shove. They contain a hidden, often controversial assumption or use emotionally charged language. Answering forces the respondent to implicitly agree with whatever premise you’ve baked in.

- Real Example: "Do you support our plan to improve our service by investing in our amazing support team?" The words "improve" and "amazing" corner the respondent into agreeing.

- The Fix: "To what extent do you support or oppose investing more resources into our customer support team?" It's neutral and allows for a full spectrum of opinion.

Actionable Takeaway: Assume every respondent is a skeptic. Write questions that a cynic could answer without rolling their eyes.

3. Double-Barreled Questions

This is the most common rookie mistake. A double-barreled question tries to ask about two different things at once but only gives you room for a single answer. The data is useless because you don’t know which part the person was answering.

- Real Example: "Was our onboarding process quick and easy to understand?" A process can be quick but confusing, or clear but painfully slow.

- The Fix:

- "How would you rate the speed of our onboarding process?"

- "How would you rate the clarity of our onboarding process?"

Actionable Takeaway: If a question contains the word "and," it's probably double-barreled. Split it. We dive deeper into how to structure questions in our guide on how to write open-ended questions.
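The "and" rule of thumb is easy to turn into an automated filter. This minimal sketch uses a hypothetical helper, `looks_double_barreled`, that flags questions for human review instead of rejecting them outright. The heuristic produces false positives: "How satisfied are you with our terms and conditions?" asks about one thing, not two.

```python
import re

# Match "and" as a whole word, case-insensitively, so words like "brand" don't trigger it.
_AND = re.compile(r"\band\b", re.IGNORECASE)


def looks_double_barreled(question: str) -> bool:
    """Rule-of-thumb check: flag any question containing the word 'and' for review."""
    return _AND.search(question) is not None


questions = [
    "Was our onboarding process quick and easy to understand?",
    "How would you rate the speed of our onboarding process?",
    "How would you rate the clarity of our onboarding process?",
]
for q in questions:
    print(looks_double_barreled(q), q)
```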

4. Absolute Questions

These questions use definitive, all-or-nothing words like "always," "never," "all," or "every." They force respondents into a corner because reality is rarely that black and white.

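- Real Example: "Do you always check your email first thing in the morning?" Very few people do anything "always," so someone who checks email first thing on most mornings has no accurate answer. They either round up to "yes" or give a technically true but misleading "no."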

- The Fix: "How often do you check your email within the first hour of your day?" (Options: Daily, A few times a week, Rarely, Never)

Actionable Takeaway: Replace absolutes with frequency scales. You'll get a much more accurate picture of real-world habits.
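Here is why the scale gives you a better picture. An "always" question reduces every habit to one yes or no, but the frequency scale keeps the whole distribution. The minimal sketch below assumes responses arrive as the option labels from the fix above. The sample answers are invented for illustration.

```python
from collections import Counter

# The frequency scale from the example fix, ordered from most to least frequent.
SCALE = ["Daily", "A few times a week", "Rarely", "Never"]


def frequency_distribution(responses: list[str]) -> dict[str, float]:
    """Share of respondents choosing each scale option, in scale order."""
    if not responses:
        raise ValueError("No responses to summarize")
    counts = Counter(responses)
    unexpected = set(counts) - set(SCALE)
    if unexpected:
        raise ValueError(f"Responses not on the scale: {sorted(unexpected)}")
    total = len(responses)
    return {option: counts[option] / total for option in SCALE}


# Hypothetical answers from eight respondents.
answers = ["Daily", "Daily", "Daily", "A few times a week",
           "A few times a week", "Rarely", "Rarely", "Never"]
print(frequency_distribution(answers))
# {'Daily': 0.375, 'A few times a week': 0.25, 'Rarely': 0.25, 'Never': 0.125}
```

With the "always" wording, most of these eight people would probably answer "no." The distribution shows that five of them check email in the first hour at least a few times a week.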

5. Ambiguous Questions

Ambiguous questions are full of vague terms, jargon, or unclear language that different people interpret in different ways. If your respondents aren't sure what you're asking, you're not getting answers to one question—you're getting answers to a dozen different questions you never intended.

- Real Example: "Is our app's performance satisfactory?" "Performance" and "satisfactory" are totally subjective. One person’s "satisfactory" is another's "unbearably slow."

- The Fix: "How would you rate the loading speed of our app's main dashboard?" Specific. Measurable. Unambiguous.

Actionable Takeaway: Replace vague words with specific metrics. Instead of "good," ask about "speed." Instead of "effective," ask about "accuracy."

6. Acquiescence and Social Desirability Bias

These two are about the respondent's psychology, but your questions can trigger them.

- Acquiescence Bias (Yea-Saying): People's tendency to agree with statements to be polite or finish a survey quickly.

- Social Desirability Bias: People answer in a way that makes them look good, not in a way that’s true.

Together, these two effects can inflate positive responses by a reported 20-30%. You can read more about how cultural norms affect survey data on Sawtooth Software's blog.

- Real Example: "Do you agree that recycling is important for protecting the environment?" This question invites both yea-saying and social desirability bias—who would say no?

- The Fix: "In the past month, how often have you recycled plastic containers?" This focuses on actual behavior, not abstract ideals.

Actionable Takeaway: Ask about past actions, not future intentions or abstract beliefs. Behavior tells the truth.

A Quick Cheatsheet: Spotting and Fixing Biased Questions

| Bias Type | Biased Question Example (Bad) | Unbiased Rewrite (Good) |
|---|---|---|
| Leading | "How much did you enjoy our intuitive new dashboard?" | "How would you rate the usability of our new dashboard?" |
| Loaded | "Where do you enjoy drinking a refreshing beer?" | "What types of beverages do you typically drink?" |
| Double-Barreled | "Was our onboarding process quick and easy to understand?" | Two questions: "How would you rate the speed of our onboarding process?" and "How would you rate the clarity of our onboarding process?" |
| Absolute | "Do you always check your email first thing in the morning?" | "How often do you check your email within the first hour of your day?" |
| Ambiguous | "Is our app's performance satisfactory?" | "How would you rate the loading speed of our app's main dashboard?" |
| Social Desirability | "Do you agree recycling is important for the environment?" | "In the past month, how often have you recycled plastic containers?" |

The Hidden Costs of Ignoring Survey Bias

Think a little bias is harmless? It's not. Biased data is worse than no data. It gives you a false sense of confidence while you steer your business straight toward a cliff.

You build features nobody wants. You pour marketing dollars into the wrong message. The costs are tangible, and they are entirely avoidable. Biased questions create a dangerous echo chamber. They tell you what you want to hear, not what you need to know.

From Bad Data to Wasted Millions

A SaaS company was convinced they had a game-changing feature idea. They surveyed their power users with leading questions written to confirm what the team already believed:

- "How excited are you about a powerful new feature that will streamline your workflow?"

- "Wouldn't it be great if you could manage all your tasks from one simple dashboard?"

The results? Overwhelmingly positive. Armed with this "validation," the company invested over a million dollars and six months of engineering time. The launch was a complete dud: the survey had manufactured enthusiasm that didn't exist in the real world. Beyond the million dollars, they lost six months they could have spent building something customers actually wanted.

The True Price of Inaccuracy

The damage goes way beyond one failed project. Biased feedback is a toxin that spreads through an organization, corrupting roadmaps and marketing strategies.

| Cost Type | Real-World Impact |
|---|---|
| Wasted Development Resources | Engineering hours and salaries burned on features that fail to get traction. |
| Misguided Marketing Spend | Ad budgets blown on campaigns promoting benefits that don't resonate. |
| Eroded Customer Trust | Users get frustrated when a company seems completely out of touch. |
| Inflated Churn Rates | Customers leave for competitors who seem to understand their problems better. |
| Decreased Team Morale | Engineers feel defeated after pouring effort into projects that ultimately flop. |

Actionable Takeaway: Start treating every survey question like a line of code. It needs to be clean, precise, and rigorously tested, because a biased question can cause more damage than a software glitch.

A Practical Framework to Detect and Eliminate Bias

Designing a great survey is a defensive game. You have to assume bias will try to sneak in. Hoping for the best won't get you clean data; you need a battle-tested process to hunt down and neutralize bias before it messes with your results. When building this framework, it's incredibly helpful to consult examples of a robust research methodology to guide your design from the start.

Stage 1: Pre-launch Peer Review

This is your cheapest and fastest line of defense. Before a survey ever sees a customer, it needs to see your colleagues. Get people from engineering, marketing, and support to take it. Their job is to be your toughest critics. They'll instantly spot jargon or flawed assumptions you were blind to.

- Real Example: A product manager writes, "How would you rate our new, streamlined checkout and payment process?" A support agent would flag that immediately, because they know from countless customer tickets that "checkout" and "payment" are two separate hurdles. A 15-minute review like this can catch most of the glaring errors.

Actionable Takeaway: Never be the sole reviewer of your own survey. Make peer review a mandatory step.

Stage 2: Cognitive Interviewing

Once it passes the internal test, watch a few real users interact with it. This is cognitive interviewing. Recruit a small handful of people (five is often enough) and ask them to "think aloud" as they go through the questions.

You’ll hear things like: "Hmm, when you say 'active user,' does that mean I log in daily, or is weekly enough? I'm not sure what to pick." This process unearths hidden friction you could never have predicted. You can dive deeper into crafting clear questions in our guide on how to create a questionnaire.

Actionable Takeaway: Watch five users take your survey before you launch it widely. The insights you'll get from seeing them pause or get confused are worth more than a thousand responses from a flawed survey.

Stage 3: Pilot Testing and Weighting

Finally, run a small-scale pilot test with a representative slice of your audience (50-100 respondents). This is your final dress rehearsal. Look for high drop-off rates, weird response patterns, or unusual completion times.
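Two of these pilot checks, drop-off and unusual completion times, take only a few lines to compute. The minimal sketch below assumes a linear survey with no skip logic and pilot data exported as plain Python structures. The function names, data shapes, and the 0.33 speeder cutoff are illustrative assumptions, not a standard.

```python
from statistics import median


def flag_speeders(completion_seconds: dict[str, float], fraction: float = 0.33) -> list[str]:
    """IDs of respondents who finished in under `fraction` of the median completion time.

    The 0.33 default is an illustrative assumption, not an industry standard; tune it.
    """
    cutoff = fraction * median(completion_seconds.values())
    return sorted(rid for rid, secs in completion_seconds.items() if secs < cutoff)


def retention_by_question(last_answered: list[int], n_questions: int) -> list[float]:
    """Share of pilot respondents who answered each question (questions numbered from 1).

    `last_answered` holds, for each respondent, the number of the last question they answered.
    A sharp drop at a question suggests rereading it and the question just before it.
    """
    total = len(last_answered)
    return [sum(1 for last in last_answered if last >= q) / total
            for q in range(1, n_questions + 1)]


# Hypothetical pilot data.
times = {"r1": 300, "r2": 280, "r3": 60, "r4": 310}
print(flag_speeders(times))  # ['r3']
print(retention_by_question([5, 5, 2, 5, 3], n_questions=5))  # [1.0, 1.0, 0.8, 0.6, 0.6]
```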

This is also your last chance to address sampling bias. The World Bank ran into this during the pandemic with phone surveys across 80 countries. Their initial samples were heavily skewed toward wealthier households. To fix it, researchers applied survey weight adjustments to rebalance the sample, a move that drastically reduced the bias. Discover more about these crucial findings on the World Bank's Open Knowledge Repository.

Actionable Takeaway: Always run a pilot test and analyze those initial results before the main launch. Compare your pilot sample's demographics to your actual customer base and apply weighting if needed.
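The simplest form of weighting is post-stratification on a single variable. Each respondent's weight is their segment's share of your real customer base divided by that segment's share of the sample. The sketch below uses this approach and does not reproduce the World Bank's own adjustment procedure. Weighting across several variables at once usually calls for raking or a dedicated survey-statistics library. The helper names and the numbers are hypothetical.

```python
import math
from collections import Counter


def poststratification_weights(segments: list[str], population_shares: dict[str, float]) -> dict[str, float]:
    """Weight per segment = population share / sample share (single-variable cell weighting)."""
    if not math.isclose(sum(population_shares.values()), 1.0):
        raise ValueError("Population shares must sum to 1")
    n = len(segments)
    counts = Counter(segments)
    missing = [s for s in population_shares if counts[s] == 0]
    if missing:
        # Weighting can't invent respondents who aren't there; recruit more instead.
        raise ValueError(f"No respondents in segment(s): {missing}")
    return {s: share * n / counts[s] for s, share in population_shares.items()}


def weighted_mean(values: list[float], segments: list[str], weights: dict[str, float]) -> float:
    """Mean of `values` with each respondent weighted by their segment's weight."""
    total_weight = sum(weights[s] for s in segments)
    return sum(v * weights[s] for v, s in zip(values, segments)) / total_weight


# Hypothetical pilot: 8 enterprise and 2 SMB respondents, but the customer base is 60/40.
segments = ["enterprise"] * 8 + ["smb"] * 2
scores = [5] * 8 + [2] * 2  # e.g. 1-5 satisfaction ratings
weights = poststratification_weights(segments, {"enterprise": 0.6, "smb": 0.4})
print(sum(scores) / len(scores))                           # 4.4 (unweighted)
print(round(weighted_mean(scores, segments, weights), 2))  # 3.8 (weighted)
```

In this example the over-represented enterprise respondents pull the unweighted average up. Weighting brings the average back to what the 60/40 customer base would report, assuming each segment's respondents resemble that segment as a whole.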

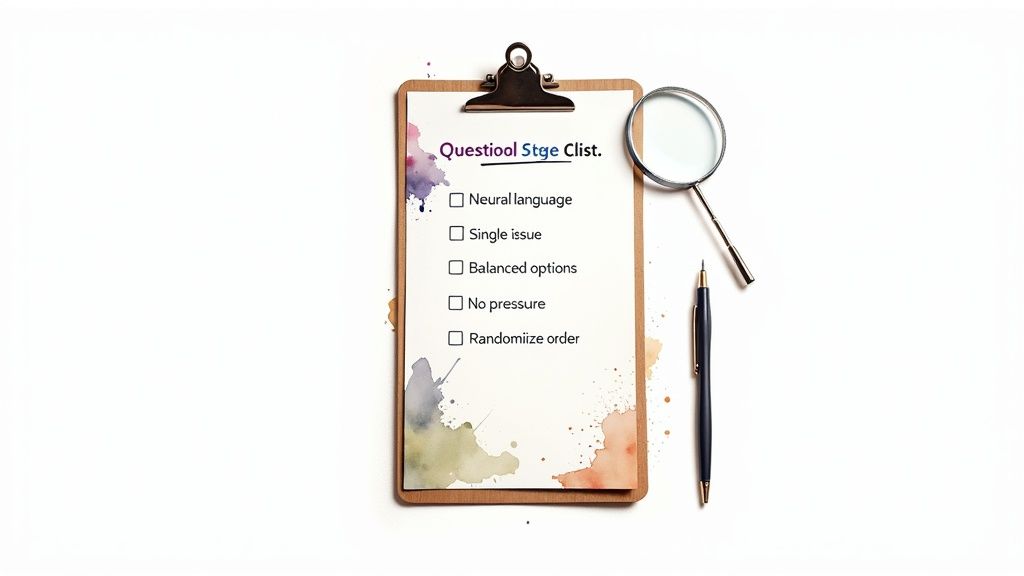

Your Pre-Launch Checklist for Unbiased Questions

You can't rely on gut feelings. You need a system. Before you hit "send," run every single question through this five-point checklist.

1. Is the Language Simple and Neutral?

Scan your questions for jargon or emotionally loaded words. Words like "innovative," "seamless," or "frustrating" steer the answer.

- Bad: "Did you find our groundbreaking AI feature intuitive?"

- Good: "How easy or difficult was it to use the AI feature?"

2. Does It Ask Only One Thing?

Hunt down the dreaded double-barreled question. Asking about "price and value" or "speed and reliability" in a single question renders the answer meaningless.

- Bad: "Please rate the speed and accuracy of our customer support."

- Good: Split it into two questions: one for speed, one for accuracy.

3. Are the Response Options Balanced and Complete?

An unbalanced scale is an easy way to poison your data. Make sure you have an equal number of positive and negative options, and a true neutral point like "Neither agree nor disagree." If you can't cover every likely scenario, add an "Other (please specify)" escape hatch.
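Once you tag each option with a direction, checking the balance of a scale is simple. The tagging is a human judgment, and the hypothetical helper below only counts. It assumes a bipolar scale such as agree/disagree. Unipolar scales like "Not at all" to "Extremely" don't need a neutral midpoint.

```python
def check_scale(valences: dict[str, int]) -> list[str]:
    """Return problems with a bipolar response scale.

    `valences` maps each option label to a hand-assigned direction:
    positive (> 0), negative (< 0), or neutral (0).
    """
    problems = []
    positives = sum(1 for v in valences.values() if v > 0)
    negatives = sum(1 for v in valences.values() if v < 0)
    if positives != negatives:
        problems.append(f"{positives} positive vs {negatives} negative options")
    if 0 not in valences.values():
        problems.append("no neutral midpoint")
    return problems


unbalanced = {"Excellent": 2, "Very good": 1, "Good": 1, "Poor": -1}
balanced = {
    "Strongly agree": 2, "Agree": 1, "Neither agree nor disagree": 0,
    "Disagree": -1, "Strongly disagree": -2,
}
print(check_scale(unbalanced))  # ['3 positive vs 1 negative options', 'no neutral midpoint']
print(check_scale(balanced))    # []
```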

4. Could This Question Pressure the Respondent?

Check for social desirability bias. Read the question and ask: "Could someone feel judged for answering this?" Questions about personal habits, income, or professional routines are classic triggers.

- Bad: "Do you agree that it's important to read industry news daily?" (This shames anyone who says no.)

- Good: "How often do you read industry news?" (With options from 'Daily' to 'Never'.)

5. Did You Randomize the Answer Order?

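This is a simple fix with a huge impact. For any multiple-choice question whose options have no natural order, randomize the order of the answers. This defends against our natural tendency to pick the first or last thing we see in a list. Keep ordered scales (like "Daily" to "Never") in their natural order, and keep escape hatches like "Other (please specify)" at the bottom. Most survey platforms have a checkbox for this. Click it.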
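If you serve a survey yourself rather than through a platform, the shuffling logic is short. The minimal sketch below assumes a hypothetical `PINNED` set of escape-hatch options that should stay last. It is meant for unordered option lists only, never for rating or frequency scales.

```python
import random

# Hypothetical set of options that stay at the bottom instead of being shuffled.
PINNED = {"Other (please specify)", "None of the above"}


def randomized_options(options: list[str], respondent_id: str) -> list[str]:
    """Shuffle unordered options per respondent, keeping pinned options last.

    Seeding with the respondent ID makes each respondent's order reproducible,
    so you can later check whether answer position affected choices.
    """
    rng = random.Random(respondent_id)
    movable = [o for o in options if o not in PINNED]
    pinned = [o for o in options if o in PINNED]
    rng.shuffle(movable)
    return movable + pinned


features = ["Reporting", "Integrations", "Mobile app", "Other (please specify)"]
print(randomized_options(features, "respondent-17"))
```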

Actionable Takeaway: Bookmark this page. Before your next survey goes live, grade every question against these five points. It’s a ten-minute exercise that can save you from making critical decisions based on bad data.

Beyond Question Wording to Systemic Data Bias

You can write perfectly neutral questions and still end up with broken data. The most dangerous biases often creep in before a single person sees your survey. Fixing your wording is just table stakes. The real challenge is fixing your methodology.

Sampling Bias: The Echo Chamber You Build Yourself

Sampling bias happens when the group you survey doesn’t accurately represent the group you want to understand. If you only ask your most engaged customers for feedback, you’ll get glowing reviews while missing the insights from users who are quietly churning.

- Real-World Example: A startup announces a new feature to their 50,000 Twitter followers and asks for feedback. The responses are amazing. But the feature flops. Why? Their Twitter audience was mostly early adopters, while their actual customer base was mostly less tech-savvy business owners who found the feature confusing. They didn't survey their customers; they surveyed their fan club.

Actionable Takeaway: Map your target audience before you write a single question. If your customers are 60% enterprise and 40% SMB, your survey respondents should reflect that ratio, or you should weight the results to match it.
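To check the ratio before you trust the results, compare each segment's share of respondents with its target share. The minimal sketch below assumes you already know each respondent's segment. `composition_gaps` and the 5-percentage-point tolerance are illustrative assumptions, not a standard.

```python
def composition_gaps(respondent_counts: dict[str, int], target_shares: dict[str, float],
                     tolerance: float = 0.05) -> dict[str, float]:
    """Return segments whose share of respondents misses the target by more than `tolerance`.

    Values are (respondent share - target share), rounded: positive means over-represented.
    """
    total = sum(respondent_counts.values())
    gaps = {seg: respondent_counts.get(seg, 0) / total - share
            for seg, share in target_shares.items()}
    return {seg: round(gap, 3) for seg, gap in gaps.items() if abs(gap) > tolerance}


# Hypothetical: 160 enterprise and 40 SMB responses against a 60/40 customer base.
print(composition_gaps({"enterprise": 160, "smb": 40}, {"enterprise": 0.6, "smb": 0.4}))
# {'enterprise': 0.2, 'smb': -0.2}
```

A flagged segment needs more targeted recruitment, or weighting at analysis time.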

Non-Response Bias: The Sound of Silence

Non-response bias sneaks in when the people who complete your survey are systematically different from those who ignore it. Responders often have the strongest opinions—they either love you or hate you. The silent majority in the middle is lost.

- Real-World Example: An e-commerce store sends a post-purchase survey. The 5% who respond are either five-star fans or one-star ranters angry about shipping. The team fixes logistics, but they miss the 95% who didn't respond—customers who thought the product was just "fine" but were frustrated by a confusing website. Getting a handle on how to analyze qualitative data is critical for spotting these deeper issues. Our guide on how to collect anonymous feedback can also help capture insights from people who would otherwise stay silent.

Actionable Takeaway: Don't just analyze who responded; analyze who didn't. If your response rate is low, the most important story is in the data you're missing.
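To analyze who didn't respond, start by comparing response rates across segments you already know about from your invitation list, such as customer type or tenure. The minimal sketch below assumes you can attach a segment label to every invitation. The helper name, segment names, and numbers are invented for illustration.

```python
from collections import defaultdict


def response_rates(invitations: list[tuple[str, bool]]) -> dict[str, float]:
    """Response rate per segment from (segment, responded) pairs for everyone invited."""
    invited = defaultdict(int)
    responded = defaultdict(int)
    for segment, did_respond in invitations:
        invited[segment] += 1
        responded[segment] += did_respond  # True counts as 1, False as 0
    return {seg: responded[seg] / invited[seg] for seg in invited}


# Hypothetical post-purchase survey: 20 invitations split across two customer types.
invites = ([("repeat buyer", True)] * 3 + [("repeat buyer", False)] * 7
           + [("first-time buyer", True)] * 1 + [("first-time buyer", False)] * 9)
print(response_rates(invites))  # {'repeat buyer': 0.3, 'first-time buyer': 0.1}
```

A segment that responds at a third of the rate of another, as the first-time buyers do here, is the most likely place for the missing story.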

FAQ: Straight Answers on Biased Questions

What are the main types of biased questions in surveys?

The most common are leading questions (suggesting an answer), loaded questions (containing an assumption), double-barreled questions (asking two things at once), and absolute questions (using words like "always" or "never").

How do you identify a biased survey question?

Look for red flags: emotional or subjective language ("amazing," "frustrating"), hidden assumptions ("Given the problems with X..."), asking two things in one sentence (using "and"), or unbalanced answer choices that lean positive or negative. A good gut check: does one answer feel more "correct" than others?

What is an example of a biased survey?

A classic example is a post-purchase feedback form designed only to get positive testimonials. Questions like, "What was your favorite part of our seamless checkout process?" or "How would you describe our excellent customer service?" are biased because they use leading language and assume a positive experience.

How does question bias affect survey results?

It skews them, often badly enough to make them useless. Biased questions don't measure true customer opinion; they manufacture a distorted reality. That leads to false confidence and bad business decisions: building unwanted features, wasting marketing spend, and, ultimately, losing touch with what your customers actually need.

Can survey bias be completely eliminated?

No, but you can reduce it until it's too small to change your decisions. The goal isn't perfection; it's reliability. By using neutral language, running pilot tests, ensuring a representative sample, and following a pre-launch checklist, you can neutralize the vast majority of biases that lead to bad data.

If you want to stop guessing what customers mean, Backsy’s AI will show you the receipts.