Measuring Customer Satisfaction Is a Waste of Time

A founder's guide to measuring customer satisfaction. Ditch vanity metrics and learn what to track to actually drive growth and avoid churn.

Unless you do it right.

Look, you’re busy. You’ve got a product to build, a team to lead, and investors who think MRR is the only word in the English language. So when someone mentions "measuring customer satisfaction," you probably roll your eyes. It sounds like corporate fluff. Another dashboard nobody checks.

Here’s the hard truth nobody admits: you’re probably right. Most companies treat it like a feel-good hobby. They collect smiley faces, generate useless reports, and then go right back to building whatever shiny feature they thought of in the shower.

This isn’t about that. This is about survival. Measuring customer satisfaction isn’t about making customers happy—it’s about finding out why they’re about to leave you before they cancel their subscription. It's an early warning system for your impending irrelevance.

Ignore your customers, and you’ll be lucky to survive the quarter.

Your 'Happy' Customers Are Liars

Let's get one thing straight. You think your customers are satisfied. They gave you a 9/10 on that last survey. Cute. The startup graveyard is filled with founders who died clutching their vanity metrics.

The brutal truth is most of your "satisfied" customers don't give a damn about you. They're just one bad experience, one price hike, or one slightly better competitor away from ghosting you forever. Satisfaction is temporary and cheap. Loyalty is earned in the trenches, fighting off bugs and bad UX, day after day.

The Great Satisfaction Lie

High CSAT scores are the business equivalent of being 'friend-zoned' by your entire user base. They like you enough not to complain, but they’ll never commit. They’ll smile, click ‘satisfied,’ and then cancel their subscription a week later.

Why? Because consumer loyalty is in the toilet. Globally, it’s cratering. While customers might report being satisfied with one transaction, their trust and willingness to stick around are plummeting. You can explore the full breakdown of this loyalty gap if you enjoy horror stories.

Founders see a high score, pat themselves on the back, and completely miss that their customers have zero emotional investment and would churn for a 10% discount.

Takeaway: Satisfaction without loyalty is a vanity metric that predicts nothing but your own cluelessness.

Stop Guessing. Find the Friction.

If you aren't measuring satisfaction with the sole intent of finding what's broken, you're just playing business. You're building a product roadmap based on your team's assumptions—not on the painful reality of the people who pay your bills.

This is the only mindset that works:

- Assume there’s a fire. Somewhere in your UX, something is broken. Your job is to find it before it burns your retention to the ground.

- Feedback is intel, not feelings. A support ticket or a 1-star review isn't just a complaint. It's a clue from the front lines telling you where you’re vulnerable.

- Friction kills faster than a lack of features. Nobody ever churned because you didn't have a dark mode. They churned because your password reset is a circle of hell.

Takeaway: Ignore the real voice of your customer and you’ll end up with a beautifully engineered product nobody wants to use.

The Only 3 Metrics That Aren't Total BS

Forget the alphabet soup consultants sell you. For measuring customer satisfaction, only three metrics are worth your attention: NPS, CSAT, and CES. But running all three at once is a rookie move that just annoys customers and gets you useless data.

Your goal isn't to collect data points. It’s to get a straight answer to a specific question so you can fix something.

The Big Three, Decoded

Here’s what these actually tell you, with zero jargon.

- Net Promoter Score (NPS): "Would they recommend us to a friend at a loud party?" This measures brand loyalty. It’s a lagging indicator of your reputation.

- Customer Satisfaction (CSAT): "How did we do just now?" This is a transactional snapshot. You use it right after a support chat or a purchase to see if you screwed up that one moment.

- Customer Effort Score (CES): "Was that a pain in the ass?" This measures friction. It asks how hard a customer had to work to get their problem solved. It’s the single best predictor of future loyalty.

Don’t just slap all three into a survey. That’s lazy. A B2B SaaS might live and die by NPS, but a high-volume e-commerce site should be obsessed with CES. Pick the one that answers the question keeping you up at night. Need help? This guide on customer satisfaction measurement methods cuts through the crap.
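For reference, the arithmetic behind the three scores fits in a few lines. This is a minimal sketch, assuming the common conventions: NPS on a 0-10 scale (promoters 9-10, detractors 0-6), CSAT as the share of 4s and 5s on a 1-5 scale, and CES as the average of a 1-7 "that was easy" rating. The function names are made up for illustration, and your survey tool may use different scales.

```python
from typing import Sequence


def nps(scores: Sequence[int]) -> float:
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        raise ValueError("no responses")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100.0 * (promoters - detractors) / len(scores)


def csat(scores: Sequence[int], satisfied_from: int = 4) -> float:
    """CSAT: % of 1-5 ratings at or above `satisfied_from` (assumed 4)."""
    if not scores:
        raise ValueError("no responses")
    satisfied = sum(1 for s in scores if s >= satisfied_from)
    return 100.0 * satisfied / len(scores)


def ces(scores: Sequence[int]) -> float:
    """CES: plain average of 1-7 ease ratings (higher means less effort)."""
    if not scores:
        raise ValueError("no responses")
    return sum(scores) / len(scores)
```

Note that NPS throws away the 7s and 8s entirely, which is one reason a decent-looking score can hide a lot of lukewarm customers.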

Takeaway: Pick one metric that aligns with the fire you’re trying to put out right now.

The No-BS Metrics Smackdown

I've seen founders worship these numbers, so let's get real about what they hide.

| Metric | What It Really Asks | Best For... | Biggest Lie It Tells You |
|---|---|---|---|
| NPS | "Are you a fanboy?" | Gauging brand health and word-of-mouth potential. | That a "promoter" will actually promote. They might love you but they’re busy. |
| CSAT | "Did we screw up that one time?" | Instant feedback on specific touchpoints. | That one good interaction means the customer is happy. They’re not. |
| CES | "Was that easy or a total headache?" | Finding and killing the friction that causes churn. | That "easy" means "valuable." An easy-to-use product that solves nothing is still useless. |

No single metric tells the whole story. They’re just gauges on your dashboard. You need to read them together to avoid flying into a mountain.

Takeaway: Numbers tell you that you have a problem; they don't tell you what the problem is.

Tying Metrics to Money

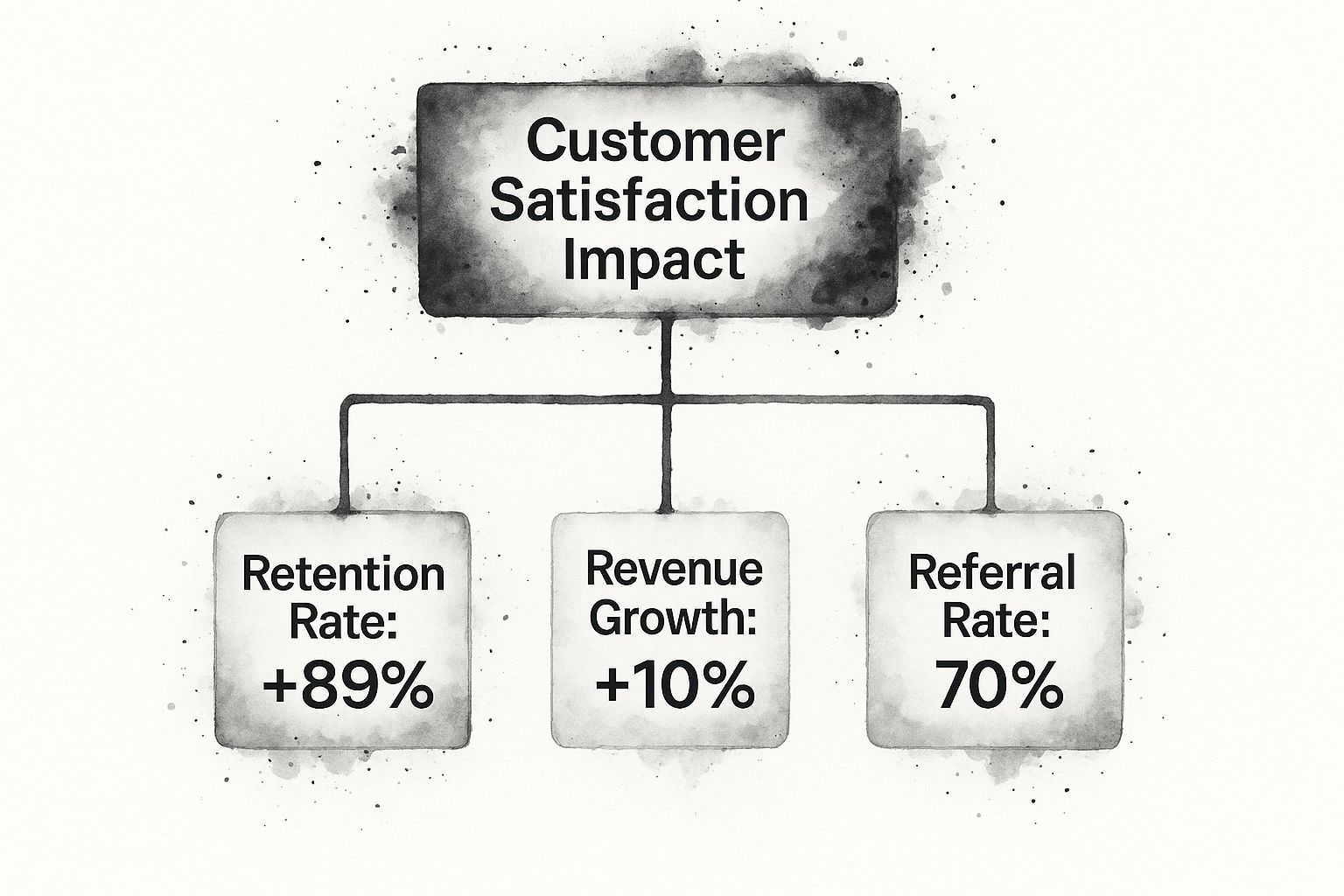

Scores are just numbers until you connect them to cash. Showing how happy customers affect your bottom line is the only way to get your team to care. Digging into proven strategies to maximize Customer Lifetime Value (CLV) shows how satisfaction connects directly to revenue. It’s not about feelings; it’s about finance.

The data is brutal. Happy customers stay longer, spend more, and act as your cheapest sales team. Unhappy ones leave. Fast. After just two bad experiences, 70% of customers are gone. You don't get many chances.
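To put a rough number on it, one common back-of-the-envelope model is CLV = monthly revenue per customer x gross margin / monthly churn rate. The sketch below assumes that simplified formula. The function name is hypothetical, and this is one of several ways to model CLV, not the definitive one.

```python
def simple_clv(monthly_revenue: float, gross_margin: float, monthly_churn: float) -> float:
    """Simplified CLV: margin-adjusted monthly revenue over expected lifetime.

    Expected customer lifetime in months is approximated as 1 / monthly_churn.
    """
    if not 0 < monthly_churn <= 1:
        raise ValueError("monthly_churn must be in (0, 1]")
    if not 0 <= gross_margin <= 1:
        raise ValueError("gross_margin must be in [0, 1]")
    return monthly_revenue * gross_margin / monthly_churn
```

With invented inputs of 100 per month at an 80% margin, cutting monthly churn from 5% to 4% moves this estimate from about 1,600 to about 2,000 per customer. That is the kind of number that gets a team to care about friction.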

Takeaway: Your metrics are smoke alarms. To find the fire, you have to read the damn comments.

So you've got your numbers. Great. You know your NPS is 42. So what? That number is useless without the story behind it. Relying only on quantitative data is like flying a plane using only the altitude gauge. You know how high you are, but not that you’re headed straight for a mountain.

The real gold is in the qualitative stuff—the messy, angry, passionate words your customers use in support tickets, reviews, and survey comments. The numbers tell you where to look. The words tell you what to fix.

The Numbers Point to the Fire

Think of your quantitative data as a smoke alarm. Its job is to shriek when something is wrong. A sudden drop in your NPS isn't the problem; it’s a bright red arrow pointing you toward the disaster.

- Quantitative data finds the symptom. It tells you that 30% of users rated their onboarding experience a 2/10.

- Qualitative data diagnoses the illness. It’s the flood of comments saying, “The setup wizard crashed three times and I still can’t connect my account.”

Your metrics tell you which part of the ship is taking on water. The customer's own words tell you how big the hole is and what you need to patch it.

Takeaway: Quantitative data shows you the "what." Qualitative data shows you the "why the hell."

The Words Tell You What to Fix

Once the numbers have pointed you to the dumpster fire, it's time to listen to the people running out of the building. You need to dive into the raw text from:

- Support Tickets: What are the recurring problems driving your customers insane?

- App Store Reviews: Ignore the 1-star rants and 5-star raves. The truth is in the 2- and 3-star reviews.

- Sales Call Notes: What objections stop deals from closing? That's your anti-roadmap.

- Open-Ended Surveys: That "anything else to add?" box is where the real truth lives.

This isn’t about skimming a few comments. It’s about systematically finding patterns. Using advanced sentiment analysis techniques helps you cut through the noise and automatically tag feedback. It turns a mountain of text into a simple, prioritized list of what’s broken.
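If you want to see the shape of that before buying anything, here is a deliberately crude sketch. It uses plain keyword matching, not real sentiment analysis, and the theme names and keyword lists are made up for illustration. It shows the idea: tag every comment, count the tags, sort.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple

# Hypothetical themes and trigger keywords; tune these to your own product.
THEMES: Dict[str, Tuple[str, ...]] = {
    "login issues": ("login", "log in", "sign in", "password"),
    "slow loading": ("slow", "loading", "timeout"),
    "missing integration": ("integration", "integrate", "connect"),
}


def tag_themes(comment: str) -> List[str]:
    """Return every theme whose keywords appear in the comment (case-insensitive)."""
    text = comment.lower()
    return [theme for theme, words in THEMES.items() if any(w in text for w in words)]


def rank_themes(comments: Iterable[str]) -> List[Tuple[str, int]]:
    """Count how many comments hit each theme, most frequent first."""
    counts = Counter(theme for c in comments for theme in tag_themes(c))
    return counts.most_common()
```

Substring matching will misfire (a keyword like "connect" also matches "disconnect"), so treat the output as a pointer to where to read, not as a verdict.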

Takeaway: Stop treating your data analysts and support reps like they live on different planets. Their work is two halves of the same brain.

Build a Goddamn Listening Engine

Right now, your customer feedback is scattered across a dozen different apps. Zendesk tickets, App Store reviews, Twitter DMs. Each one is a tiny scream into the void. A proper system for measuring customer satisfaction isn't about checking these buckets. It's about building a machine that funnels all those screams into a single, coherent signal.

And no, this isn't some six-figure enterprise monstrosity. Think of it as a duct-taped pipeline you build in an afternoon. You're a founder. Be scrappy.

From Digital Noise to Clear Signal

Your first job is to get every piece of feedback into one place. This is non-negotiable.

Here’s the starter-kit version:

- The Plumber: Get Zapier or Make.com.

- The Triggers: Set one up for every feedback source. New G2 review? Zap it. New tagged support ticket? Zap it.

- The Destination: Pipe it all into a dedicated Slack channel or an Airtable base.

Boom. You just broke down the data silos. Now your product team sees the same complaints your support team does. You have a single source of truth for customer pain.
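If you would rather script the plumbing than click through a no-code tool, the core move is the same: squash every source into one record shape before it lands anywhere. A minimal sketch follows. The payload field names (`reviewer`, `body`, `requester_email`, `description`) are hypothetical and do not mirror any real vendor's schema. The `{"text": ...}` JSON body is the simple message format that Slack incoming webhooks accept.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class FeedbackItem:
    source: str  # where it came from, e.g. "review" or "support"
    author: str
    text: str


def from_review(payload: dict) -> FeedbackItem:
    """Normalize a review payload (hypothetical field names)."""
    return FeedbackItem("review", payload.get("reviewer", "unknown"), payload["body"].strip())


def from_ticket(payload: dict) -> FeedbackItem:
    """Normalize a support-ticket payload (hypothetical field names)."""
    return FeedbackItem(
        "support", payload.get("requester_email", "unknown"), payload["description"].strip()
    )


def to_slack_message(item: FeedbackItem) -> str:
    """Build the JSON body you would POST to a Slack incoming webhook URL."""
    return json.dumps({"text": f"[{item.source}] {item.author}: {item.text}"})
```

Sending is left out on purpose: once every source produces a `FeedbackItem`, swapping Slack for Airtable or a database is a one-function change.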

Takeaway: If your feedback lives in more than one place, it might as well not exist.

Don't Drown in Data. Automate or Die.

Okay, you built the pipeline. Congratulations, you now have a firehose of raw text pointed at your face. Manually reading and tagging thousands of comments is a form of self-torture that guarantees you'll miss the biggest trends.

This is where you either give up or get smart.

You built a machine to collect the feedback. Now you need a machine to understand it. Doing this manually is like trying to count grains of sand on a beach.

This is exactly what platforms like Backsy solve. Instead of dumping raw text into a spreadsheet, you pipe it into a system that automatically analyzes sentiment and identifies themes ("login issues," "slow loading," "missing integration"). It turns chaotic complaints into a prioritized hit list.

You stop guessing what the biggest fire is. The dashboard tells you, "47% of negative feedback last month mentioned the checkout process." Now you know what to fix first. Still stuck just trying to get feedback? This guide on how to get customer feedback has no-fluff ways to start.
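A stat like that is just a filtered count. Here is a rough sketch of the arithmetic, assuming each feedback item has already been labeled with a sentiment and a list of themes by some upstream step. The dictionary keys are hypothetical, and this is not a description of how any particular product computes it.

```python
from collections import Counter
from typing import Dict, Iterable


def negative_theme_share(items: Iterable[dict]) -> Dict[str, float]:
    """Percent of negative items that mention each theme, biggest first.

    Each item is assumed to look like:
    {"sentiment": "negative" | "neutral" | "positive", "themes": ["checkout", ...]}
    """
    negatives = [i for i in items if i["sentiment"] == "negative"]
    if not negatives:
        return {}
    # set() so one rant mentioning a theme twice still counts once.
    counts = Counter(theme for i in negatives for theme in set(i["themes"]))
    return {theme: round(100.0 * n / len(negatives), 1) for theme, n in counts.most_common()}
```

Because one comment can hit several themes, the percentages can add up to more than 100. That is fine: the point is the ranking, not the total.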

Takeaway: Your job isn't to read feedback; it's to fix the problems the feedback reveals. Automate the reading part.

Stop Admiring the Problem and Fix It

Alright, you’ve done it. You have dashboards. You have NPS scores. You have a firehose of tagged comments. You presented it all at the all-hands meeting. Everyone nodded and said, “great insights.”

Then they went right back to building the same shit that was on the roadmap last quarter.

Welcome to the “corporate theater of feedback.” It’s where 99% of companies fail. An insight that doesn’t force a change is just an expensive hobby. If your insights die in a PowerPoint deck, you’ve wasted everyone’s time.

Don't believe me? A pathetic 7% of brands actually improved their customer experience last year. The rest either stagnated or got worse. They're all "listening," but nobody is acting. Want the gory details? You can explore the full Forrester report on declining CX scores.

Let's talk about how to actually do something.

"Who, What, When." That's It.

Most feedback dies because it’s vague. "Users are confused by the dashboard" is an observation, not a task. To make it real, you need a brutally simple framework.

- WHAT is the specific problem? Not "onboarding is confusing." It's "Users drop off after the 'connect calendar' step because the permission scope is terrifying."

- WHO owns the fix? Not "the product team." It’s "Sarah, the PM for activation." Give it a name.

- WHEN is the deadline? Not "next quarter." It's "A fix will be shipped in the next sprint, two weeks from today."

This turns a fluffy insight into a Jira ticket with an owner and a due date. It creates accountability. Now it's Sarah's job, and everyone knows it.
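If you track these outside your ticketing tool, you can make the framework enforce itself. A small sketch, with a made-up `ActionItem` type and a hypothetical list of non-owners, that refuses to exist without a specific problem, a named person, and a date:

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical examples of "owners" that are really nobody in particular.
VAGUE_OWNERS = {"the product team", "product team", "engineering", "everyone"}


@dataclass(frozen=True)
class ActionItem:
    what: str  # the specific problem
    who: str   # a named person
    when: date # the deadline

    def __post_init__(self) -> None:
        if not self.what.strip():
            raise ValueError("WHAT is empty: describe the specific problem")
        if not self.who.strip() or self.who.strip().lower() in VAGUE_OWNERS:
            raise ValueError("WHO must be a named person, not a team")

    def is_overdue(self, today: date) -> bool:
        """True once the deadline has passed."""
        return today > self.when
```

Trying to create an item owned by "the product team" raises an error, which is the whole point.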

Takeaway: If feedback leaves a meeting without a name and a date attached, it’s already dead.

Squeaky Wheels vs. Systemic Failures

Now for the hard part: what to act on? You’ll get a lot of noise. One angry customer demanding a niche feature is a distraction, not a crisis. You have to separate the one-off squeaky wheel from a systemic failure.

Here’s a quick filter:

- Is it a pattern? One person mentions it, you log it. Ten people mention it, you fix it.

- Does it block a critical path? A complaint about a button color is noise. A complaint about the credit card form failing is a five-alarm fire.

- Does it align with your vision? Sometimes, customers ask for a horse when you're building a car. It's okay to say no.
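The filter above can be sketched as a tiny triage function. The threshold of ten mentions comes straight from the rule of thumb in the list; the order of the checks (critical path first, then vision, then volume) and the return labels are assumptions, so adjust them to taste.

```python
def triage(
    mentions: int,
    blocks_critical_path: bool,
    fits_vision: bool,
    pattern_threshold: int = 10,
) -> str:
    """Classify a piece of feedback as 'fix now', 'decline', 'fix', or 'log'."""
    if blocks_critical_path:
        return "fix now"  # e.g. the credit card form is failing
    if not fits_vision:
        return "decline"  # they want a horse, you build cars
    if mentions >= pattern_threshold:
        return "fix"      # enough people said it to call it a pattern
    return "log"          # one-off: write it down and move on
```

Checking the critical path first means a single report of a broken payment form outranks a hundred requests for a new button color.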

We once had a user complain our onboarding video was too long. We almost ignored it. Then we checked the analytics: 60% drop-off right where the video appeared. That one comment wasn’t a squeaky wheel; it was the tip of the iceberg. We killed the video, built an interactive checklist, and our activation rate jumped 20%.
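Checking a single comment against your funnel is simple arithmetic. A minimal sketch, assuming you can export an ordered list of steps with the number of users who reached each one (the step names and counts in the usage note are invented):

```python
from typing import List, Tuple


def drop_off_by_step(funnel: List[Tuple[str, int]]) -> List[Tuple[str, float]]:
    """For each step, the % of users who reached it but never reached the next one.

    `funnel` is an ordered list of (step_name, users_who_reached_step).
    The last step has no "next", so it is not included in the result.
    """
    result = []
    for (name, users), (_, next_users) in zip(funnel, funnel[1:]):
        lost_pct = 0.0 if users == 0 else 100.0 * (users - next_users) / users
        result.append((name, round(lost_pct, 1)))
    return result
```

For a funnel like signup (1000 users), onboarding video (800), first project (320), this reports a 20% loss at signup and a 60% loss at the video step. One complaint plus a number like that is not a squeaky wheel.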

Closing the loop is the final step. When you fix something, tell the customers who complained. This turns pissed-off users into evangelists. If you need a playbook, here’s one on how to improve customer satisfaction scores.

Takeaway: Insight without action is just expensive corporate art.

Your Roadmap Is a Lie. Fix It with Feedback.

Let's be honest about your product roadmap. It’s mostly a fantasy novel written by your product team, full of heroic features you assume customers want. In reality, it's a collection of biases, shiny objects, and your CEO's latest "great idea."

It’s time for a reality check.

Measuring customer satisfaction isn’t a passive task for the support team. It is the most brutally honest input for your product strategy. Ignore it, and you're just burning cash on features nobody will use.

From Founder Hubris to Market Reality

This isn't about being "customer-led" and building every feature someone tweets at you. That’s how you build a bloated mess. This is about being market-driven. It’s about using the signals of real pain from your users to decide what matters.

You’re looking for the overlap between user problems and your company vision. Prioritize the issues that are actively costing you money—through churn, support tickets, or lost deals—over the "cool idea" someone had last week. After you find insights, the next step is acting on them. This guide on harnessing customer feedback for business growth shows how.

Takeaway: Your customers don’t care about your elegant code. They care if your product solves their problem. Your roadmap must reflect that.

The 30-Day Roadmap Purge

Talk is cheap. Here’s a challenge: sometime in the next 30 days, kill one feature on your roadmap. I’m serious. Just murder it. It’s probably a low-impact bet or a pet project anyway.

In its place, create an initiative that directly fixes the #1 complaint you've found. Don’t overthink it. Find the single biggest point of friction that drives users insane and dedicate a sprint to crushing it.

- Confusing onboarding step causing drop-offs? Fix it.

- Critical report agonizingly slow to load? Speed it up.

- Key integration clunky and unreliable? Make it seamless.

This forces a shift from building imaginary value to solving real pain. It will feel uncomfortable. But the results—less churn, happier users, and a product that actually works—will speak for themselves.

Takeaway: Stop shipping your org chart and start shipping solutions to your customers' biggest problems.

Frequently Asked Questions (The Blunt Version)

No fluff, just answers.

How often can I survey customers without pissing them off?

Wrong question. The right question is, "How often can I ask for feedback that feels relevant and not like a chore?"

- Transactional stuff (CSAT/CES): Ask immediately after an event, like a support ticket closing. It’s a quick tap while the memory is fresh.

- Big picture stuff (NPS): Ask quarterly or semi-annually. Any more than that and you look needy.

The golden rule: Never ask for feedback unless you intend to act on it. When customers see you making changes based on what they say, they'll happily tell you more.
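The cadence rule is easy to automate so nobody has to remember it. A small sketch, assuming you store the date each customer last received an NPS survey; the 90-day gap is one reading of "quarterly", and the names are hypothetical.

```python
from datetime import date
from typing import Optional

NPS_MIN_GAP_DAYS = 90  # roughly quarterly; use ~180 for semi-annual


def should_send_nps(
    last_sent: Optional[date],
    today: date,
    min_gap_days: int = NPS_MIN_GAP_DAYS,
) -> bool:
    """True if this customer has never been surveyed or the gap has elapsed."""
    if last_sent is None:
        return True
    return (today - last_sent).days >= min_gap_days
```

Transactional CSAT and CES surveys do not need a throttle like this, since they are tied to an event rather than a calendar.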

I'm a SaaS startup. What's the one metric I should start with?

Customer Effort Score (CES). Full stop.

Your biggest threat is churn caused by friction. NPS is for later when you have a brand. CSAT is too vague. CES gets straight to the point: "Was that easy or a pain in the ass?" High effort is a direct predictor of churn. Your first job is to make your product less annoying to use.

What if feedback contradicts my genius product vision?

Good. If feedback always confirmed your vision, you'd be building in an echo chamber. Your job isn't to be a short-order cook, building whatever customers demand. You're a doctor. You listen to the symptoms (feedback), then use your expertise to make a diagnosis (the product decision).

When feedback clashes with your vision, ask one question: "Is this a real problem for my ideal customer, or is this a feature request from someone we shouldn't be serving anyway?"

If it's the first, your vision needs a dose of reality. If it's the second, it’s not just okay to say no—it’s your job.

Stop guessing what your customers think and let Backsy analyze all your feedback so you can stop running reports and start fixing what’s broken.