Your Survey Is a Lie. Here's How to Ask Questions That Get the Truth.

Stop wasting time. Learn the only 8 types of questions to ask in a survey to get feedback that actually grows your startup. Founder-approved, no fluff.


Your current survey is a masterpiece of self-deception. You’ve built a beautiful, color-coordinated form that asks customers if they 'like' your product. They tick 'yes' because they’re nice people, and you get a dopamine hit from a 98% satisfaction score. Then your churn rate climbs, and you wonder why.

Stop building vanity metrics and start building a business. Most Voice of Customer (VoC) analysis is just corporate role-playing. You're not a corporate drone with a budget to burn on useless reports. You're a founder, and every piece of feedback is a bullet you can either dodge or take to the chest. The difference is knowing the right questions to ask in a survey.

Ignore your customers, and you’ll be lucky to survive the quarter. The goal isn't to feel good about your product; it's to get good. That means surgically extracting the truth, even when it’s ugly. Forget the generic templates filled with polite, useless questions. They are designed to create feel-good charts, not actionable intelligence. This list is different. It’s a weapon.

Let's cut the crap and dive into the questions that actually matter.

1. Multiple Choice Questions

Multiple choice questions are the survey equivalent of a bouncer at a club. They force people into a category, fast. You get clean, quantifiable data you can slap on a chart, not a rambling, unusable paragraph from a user who thinks they’re your co-founder. It's the fastest way to segment your audience and see trends without a PhD in text analysis.

Netflix doesn't ask "What kind of shows do you feel like watching?" That's a therapist's question. They serve up genres and make you pick. That data feeds the algorithm directly. No ambiguity, no feelings, just inputs. That's your model.

When to Use Multiple Choice Questions

Use these when you already know the likely answers and just need to know the distribution. Think demographics, frequency, or a forced choice between known options.

- Demographics: Age ranges, income brackets.

- Behavioral Frequency: "How often do you use our product?" (Daily, Weekly, Monthly)

- Feature Prioritization: "Which of these features is most important to you?" (List of features)

Actionable Tips for Implementation

Don't screw up the basics.

- Make Choices Mutually Exclusive: Don't use age ranges like "20-30" and "30-40." Someone who is 30 fits in both. It's sloppy and your data will be garbage.

- Aim for 4-7 Choices: Too few options force bad fits. Too many cause decision paralysis.

- Randomize Answer Order: People are lazy. They tend to pick whatever sits near the top. Shuffle the order of unordered options (not ranges or frequencies, which need to stay in sequence) to fight this bias. It's a single checkbox in most survey tools. Click it.
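If you want to sanity-check the first and third tips before a survey goes out, a few lines of Python will do it. This is a minimal sketch, not a feature of any survey tool: `AGE_BRACKETS`, `brackets_overlap`, and `shuffled_options` are made-up names, and the bracket edges are assumptions chosen for illustration.

```python
import random

# Hypothetical age brackets as inclusive (low, high) pairs with no shared endpoints.
AGE_BRACKETS = [(18, 29), (30, 39), (40, 49), (50, 64)]


def brackets_overlap(brackets):
    """Return True if any two neighbouring brackets share at least one value."""
    ordered = sorted(brackets)
    return any(
        prev_high >= next_low
        for (_, prev_high), (next_low, _) in zip(ordered, ordered[1:])
    )


def shuffled_options(options, seed=None):
    """Return a shuffled copy of the options, leaving the original list untouched."""
    rng = random.Random(seed)
    shuffled = list(options)
    rng.shuffle(shuffled)
    return shuffled


print(brackets_overlap(AGE_BRACKETS))          # prints False
print(brackets_overlap([(20, 30), (30, 40)]))  # prints True: a 30-year-old fits both
```

Only shuffle options that have no natural order, such as a list of features. Shuffling "Daily, Weekly, Monthly" just makes the question harder to read.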

The takeaway: Use multiple choice to find out what people are doing, not why.

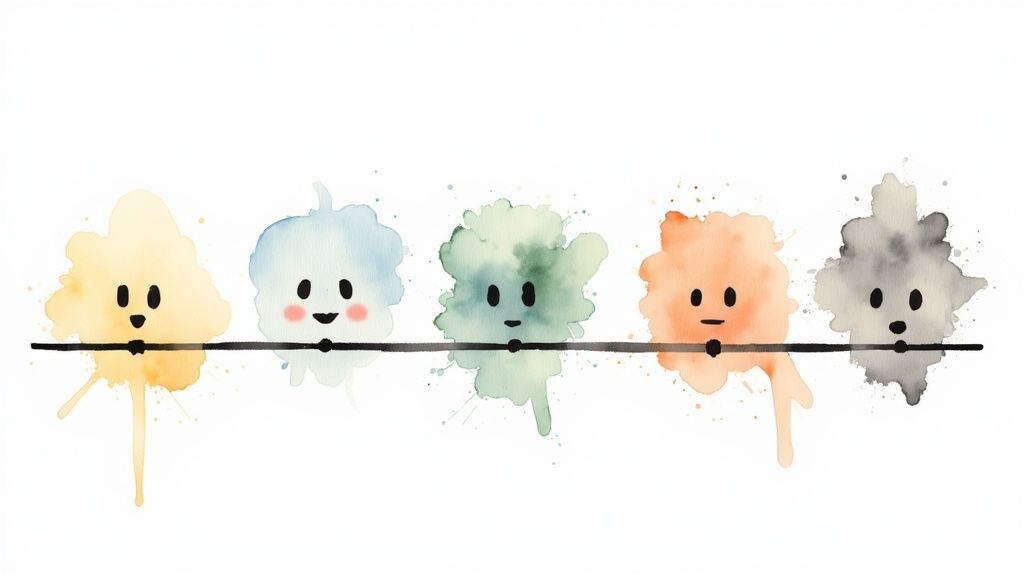

2. Likert Scale Questions

Likert scales are for measuring the intensity of an emotion. They turn vague feelings like "satisfaction" into a number you can track. Instead of a useless "yes/no," you get a score on a 1-to-5 scale that tells you if a customer is "meh" or "ready to riot." This is how you stop guessing and start measuring sentiment.

Google doesn't ask its engineers, "Are you happy at work?" They ask them to rate statements like, "I see myself still working here in two years" on a 5-point scale. That nuance separates the loyalists from the flight risks. It turns subjective experience into operational data.

When to Use Likert Scale Questions

Use this when you need to gauge agreement, satisfaction, frequency, or importance. If the answer isn't a simple binary choice, the Likert scale provides the structure to measure it. It's the best way to benchmark sentiment over time and see if your efforts are actually moving the needle.

- Customer Satisfaction: "How satisfied were you with your recent support experience?" (Very Unsatisfied to Very Satisfied)

- Employee Engagement: "I feel valued by my team." (Strongly Disagree to Strongly Agree)

- Product Feedback: "The new user interface is easy to navigate." (Strongly Disagree to Strongly Agree)

Actionable Tips for Implementation

Sloppy scales produce garbage data.

- Keep Labels Consistent: Don’t mix "Strongly Agree" with "Extremely Satisfied" in the same survey. Pick a scale and stick with it.

- One Idea Per Question: Don't ask, "Was our support team fast and helpful?" They could be fast but useless. Split it into two questions.

- Use Reverse-Scored Items Sparingly: A negatively phrased question can check if people are paying attention, but overdo it and you'll just confuse them.
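A reverse-scored item has to be flipped back before you average it with the rest, or it drags the score in the wrong direction. Here's a minimal sketch, assuming a 1-to-5 scale; `reverse_score` is a hypothetical helper name and the survey item in the comment is invented.

```python
def reverse_score(score, scale_min=1, scale_max=5):
    """Flip a response on a numeric scale (1 becomes 5, 2 becomes 4, and so on)."""
    if not scale_min <= score <= scale_max:
        raise ValueError(f"score {score} is outside {scale_min}-{scale_max}")
    return scale_max + scale_min - score


# Hypothetical negatively phrased item: "I often struggle to find what I need."
# A 4 (Agree) there should count as a 2 toward "the interface is easy to navigate".
print(reverse_score(4))  # prints 2
```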

The takeaway: Likert scales turn fuzzy feelings into hard numbers you can actually manage.

3. Open-Ended Questions

Open-ended questions are your secret weapon for finding out what you don't know you don't know. You stop forcing people into your preconceived boxes and give them a blank text field. This is where you find the raw, unfiltered truth—the frustrations, the brilliant ideas, and the "why" behind your other data. It’s messy, but the insights are often pure gold.

After a stay, Airbnb doesn't just ask you to rate cleanliness on a scale of 1-5. They ask, "What could your host do to improve?" A 3-star rating is a signal; the text explaining why it's a 3-star rating is the roadmap to fixing it.

When to Use Open-Ended Questions

Use these when you need to explore a topic, understand the reasons behind a score, or capture ideas you hadn't thought of. If you want to move beyond the what and dig into the why and how, open-ended is the only way to go.

- Exploring "Why": After a low NPS score, ask, "What was the primary reason for your score?"

- Idea Generation: "What is one feature we could build that would make our product indispensable to you?"

- Capturing Specifics: "Please describe the issue you encountered in as much detail as possible."

Actionable Tips for Implementation

Getting value requires more than just asking.

- Use Them Sparingly: Your user's time is not free. One or two well-placed questions are more effective than five lazy ones.

- Be Incredibly Specific: Don't ask, "Any feedback?" Ask, "What is the #1 thing we could do to improve your experience with our checkout process?"

- Place Them Strategically: Ask them at the end of a survey, after you've primed the respondent with more structured questions.

The takeaway: Open-ended questions uncover the problems and opportunities your team is blind to.

4. Rating Scale Questions

Rating scales turn subjective opinions into numbers you can chart. They're the engine behind the Net Promoter Score (NPS), famously used by Apple to gauge loyalty with a single "How likely are you to recommend us?" question on a 0-10 scale. They also power the entire review economy. You can’t argue with a 2.3-star average. It’s a brutal, public metric of your value.

This is your go-to when you need a hard number to slap on a KPI dashboard and track over time. It’s the difference between saying "some customers are unhappy" and "our average satisfaction score dropped from 4.2 to 3.8 last quarter." One is an observation; the other is a crisis.
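For reference, NPS is the percentage of promoters (scores of 9 or 10) minus the percentage of detractors (scores of 0 through 6). Here's a minimal sketch of that arithmetic next to a plain average for a 1-5 satisfaction score. The function name and every sample score are invented for illustration.

```python
from statistics import mean


def nps(scores):
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        raise ValueError("need at least one score")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


# Made-up answers to "How likely are you to recommend us?" (0-10).
recommend_scores = [10, 9, 9, 8, 7, 6, 3, 10]
print(nps(recommend_scores))  # prints 25.0

# Made-up 1-5 satisfaction ratings, averaged for a dashboard.
csat_scores = [5, 4, 4, 3, 5]
print(round(mean(csat_scores), 1))  # prints 4.2
```

Scores of 7 and 8 count toward the total but toward neither group, which is why NPS can move even when the average barely changes.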

When to Use Rating Scale Questions

Use rating scales when you need to benchmark performance, track changes over time, or compare different things against each other.

- Customer Satisfaction (CSAT): "How satisfied were you with our customer support?" (Scale of 1-5)

- Likelihood to Act: "How likely are you to purchase from us again in the next 3 months?" (Scale of 1-10)

- Feature Importance: "Please rate the importance of the following features." (Each rated 1-5)

Actionable Tips for Implementation

The scale you choose directly impacts the data you get.

- Choose the Right Scale Length: A 1-5 scale is simple. A 0-10 scale provides more granularity for things like loyalty. Decide if you need nuance or a quick read.

- Use Clear Anchor Labels: Don't just use numbers. Label the endpoints (e.g., "Not at all Satisfied" and "Extremely Satisfied"). Ambiguity kills data quality.

- Keep Your Scale Consistent: If 5 is positive for one question, don't switch to 1 being positive on the next. This basic mistake will invalidate your results.

- Leverage Visuals: Stars or smiley faces are quicker to scan than text labels. It’s a simple way to lift completion rates, especially on mobile.

The takeaway: Rating scales translate subjective experience into a number you can track, manage, and improve.

5. Yes/No (Dichotomous) Questions

Yes/No questions are a meat cleaver. They offer just two answers, forcing a definitive choice and eliminating any gray area. Use them when you need an unambiguous, binary signal. There's no room for interpretation, just a clean data point.

When you unsubscribe from an email list, the sender asks, "Are you sure you want to unsubscribe?" with a simple "Yes" or "No." They need a clear directive, not your life story. It’s a gate, not a conversation.

When to Use Yes/No Questions

Use this for screening, qualification, or gathering facts where only two outcomes exist. They're perfect for the start of a survey to route people down different paths or to confirm a behavior before diving deeper.

- Screening Participants: "Are you the primary decision-maker for software purchases at your company?"

- Confirming Actions: "Did you use our mobile app today?"

- Gauging Agreement: "Do you agree to the terms and conditions outlined above?"

Actionable Tips for Implementation

Their simplicity is deceptive.

- Avoid Subjectivity: "Are you satisfied with our service?" is a weak Yes/No question because satisfaction is a spectrum. Use a Likert scale for that. Stick to objective facts.

- Ensure it's Truly Binary: "Do you enjoy our new features and improved UI?" is a disaster. A user might like one but not the other. Split it.

- Offer an Escape Hatch: Sometimes people genuinely don't know. Adding a "Don't know" option prevents them from guessing and corrupting your data.

The takeaway: Use Yes/No questions for filtering and facts, not for feelings.

6. Rank Order Questions

Rank order questions are your defense against lazy consensus. Instead of asking if five features are all "important" (they always are), you force respondents to decide which one is most important. This reveals the hierarchy of what truly matters to them. It cuts through the polite "yeses" and gives you a clear priority list.

A product team doesn't ask, "Do you want a better calendar integration?" and "Do you want AI summaries?" They'd get a "yes" to both. Instead, they present a list and make users rank them. This tells them precisely where to allocate engineering resources for maximum impact.

When to Use Rank Order Questions

Use this when you need to understand relative importance and force trade-offs. It's for situations where everything seems like a priority, and you need to see what rises to the top when users have to choose.

- Feature Prioritization: "Rank the following features from most to least important for your workflow."

- Brand Perception: "Please rank these brands based on your perception of their quality."

- Customer Needs Analysis: "Rank the following service attributes in order of importance to you." (e.g., Price, Speed, Customer Support)

Actionable Tips for Implementation

The design of the question matters.

- Limit the List to 3-7 Items: Asking someone to rank 15 things is torture. They'll get tired and give you random answers. Keep it short.

- Use a Drag-and-Drop Interface: It’s intuitive and far less tedious than assigning numbers to each item. This is standard now.

- Provide Crystal-Clear Instructions: State the criteria. "Please drag and drop the items below, placing your most preferred option at the top."

- Ensure Comparability: Don't ask people to rank "Price," "Our Mobile App," and "Free Shipping." It's a confusing apples-to-oranges comparison.
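Once the rankings come in, you still have to merge them into one priority list. One common way is a Borda count: each item earns more points the higher a respondent places it. This is a sketch of one option, not a method this article prescribes; `borda_scores` and the sample rankings are made up.

```python
from collections import defaultdict


def borda_scores(rankings):
    """Combine ranked lists: top spot earns n-1 points, last spot earns 0."""
    scores = defaultdict(int)
    for ranking in rankings:
        n = len(ranking)
        for position, item in enumerate(ranking):
            scores[item] += n - 1 - position
    # Highest score first; ties broken alphabetically so the output is stable.
    return sorted(scores.items(), key=lambda pair: (-pair[1], pair[0]))


# Three hypothetical respondents, each ranking from most to least important.
rankings = [
    ["Price", "Speed", "Customer Support"],
    ["Speed", "Price", "Customer Support"],
    ["Price", "Customer Support", "Speed"],
]
print(borda_scores(rankings))
# prints [('Price', 5), ('Speed', 3), ('Customer Support', 1)]
```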

The takeaway: Ranking forces a trade-off, revealing what customers value most when they can’t have everything.

7. Matrix Questions

Matrix questions are a survey assembly line. They bundle multiple related questions into a single grid, using the same scale. Instead of asking three separate questions about your support team, you present one grid to rate speed, knowledge, and friendliness from "Very Dissatisfied" to "Very Satisfied." It's efficient for you and the user.

A hotel presents a single matrix to rate the check-in, room cleanliness, restaurant, and pool. This gives them a clean, at-a-glance dashboard of what's working and what's on fire across their entire operation. It's built for comparative analysis at scale.

When to Use Matrix Questions

Use this when you need to measure sentiment across a set of related attributes. If you want to see how different parts of a single experience stack up against each other, the matrix is your most powerful tool.

- Employee Satisfaction: Rating work-life balance, management communication, and compensation.

- Product Feature Feedback: "How satisfied are you with the following features?" (List of features vs. satisfaction scale).

- Brand Perception: Evaluating your brand against attributes like "Innovative," "Trustworthy," and "High-Quality."

Actionable Tips for Implementation

A bad matrix will tank your completion rate.

- Keep Rows to a Minimum: Anything more than 5-7 rows is a cognitive burden. If you have more, split them into multiple grids.

- Ensure Scale Consistency: The scale must logically apply to every single item. Don't mix apples and oranges in the rows.

- Randomize Row Order: People get lazy and click down one column ("straight-lining"). Randomizing the row order helps mitigate this.

- Test on Mobile: A matrix that looks great on desktop can be a nightmare on a phone. Endless horizontal scrolling is a guaranteed way to lose respondents. Test it.
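You can also catch straight-lining after the fact. Here's a minimal sketch that flags any respondent who gave the same answer to every row of a matrix. The `is_straight_lined` name, the `min_rows` cutoff, and the sample responses are all assumptions for illustration.

```python
def is_straight_lined(answers, min_rows=3):
    """Return True when a matrix with enough rows got one identical answer throughout."""
    return len(answers) >= min_rows and len(set(answers)) == 1


# Hypothetical matrix responses: respondent id -> one 1-5 rating per row.
responses = {
    "r1": [4, 4, 4, 4, 4],
    "r2": [5, 3, 4, 2, 4],
}
flagged = [rid for rid, answers in responses.items() if is_straight_lined(answers)]
print(flagged)  # prints ['r1']
```

Treat a flag as a reason to look closer, not an automatic delete. Some people really are equally satisfied with everything.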

The takeaway: Matrices are brutally efficient for comparing related items but will destroy your survey if designed poorly.

8. Open-Ended Questions (Repeated for Emphasis)

Yes, this is here twice. Because you're still not taking it seriously enough. Open-ended questions are the "why" behind all the numbers you just collected. This is where you uncover the problems, ideas, and frustrations you never even thought to ask about. It’s messy, it’s qualitative, but the most valuable insights live here.

Superhuman didn't ask users to rate features. They asked, "How would you feel if you could no longer use Superhuman?" then focused on the "very disappointed" segment, digging into their open-ended responses to find the core value. This wasn't about stats; it was about understanding passionate user stories to build a product people couldn't live without.

When to Use Open-Ended Questions

Use them when you need context, depth, and unexpected insights. If you’re at the beginning of a product cycle or trying to understand a sudden drop in satisfaction, this is your tool. Stop guessing and start listening.

- Discovering Unknowns: "Is there anything else you'd like us to know?" This simple question can reveal major pain points.

- Post-Interaction Feedback: "What was the primary reason for your score today?" after a CSAT or NPS rating.

- Idea Generation: "If you could change one thing about our service, what would it be?"

Actionable Tips for Implementation

You're not collecting data for a spreadsheet; you're collecting intelligence.

- Ask One Thing at a Time: Don't ask, "How did you find our pricing and customer support?" Split it into two questions to get clean answers.

- Place Them Strategically: Don't start a survey with a heavy-lifting open-ended question. Put one at the end to catch final thoughts.

- Use AI for Analysis: Manually reading hundreds of responses is a founder's nightmare. Use tools that leverage AI to automatically tag themes like "pricing," "bug," or "feature request." This turns a wall of text into actionable insights.
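To make the idea of theme tagging concrete, here is a deliberately crude stand-in: plain keyword matching, not AI. It shows the shape of the output (a response goes in, a list of themes comes out) and nothing more. The theme names come from the tip above; the keyword lists and `tag_themes` are assumptions.

```python
# Hypothetical keyword lists; a real tool would infer themes rather than match strings.
THEME_KEYWORDS = {
    "pricing": ("price", "pricing", "expensive", "cost"),
    "bug": ("bug", "crash", "error", "broken"),
    "feature request": ("wish", "would love", "please add", "missing"),
}


def tag_themes(response):
    """Return every theme whose keywords appear in the response (case-insensitive)."""
    text = response.lower()
    return [
        theme
        for theme, keywords in THEME_KEYWORDS.items()
        if any(keyword in text for keyword in keywords)
    ]


print(tag_themes("The app keeps crashing and the price is too high"))
# prints ['pricing', 'bug']
```

Substring matching like this misses synonyms and sarcasm and will mis-tag some responses, which is exactly the gap the AI tools are meant to close.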

The takeaway: All your quantitative data tells you what is happening; open-ended questions tell you why it matters.

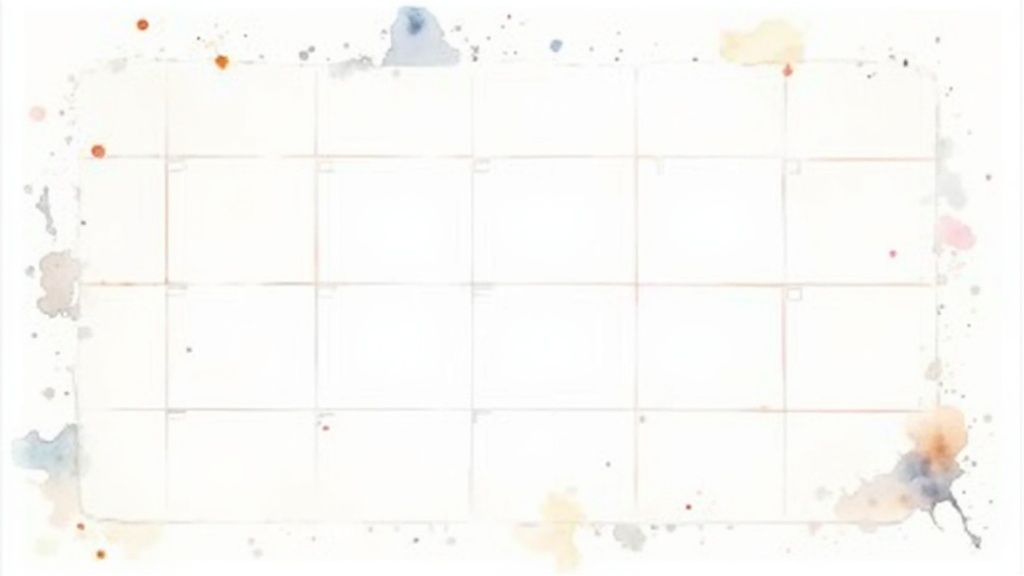

Survey Question Types Comparison Table

| Question Type | Implementation Complexity 🔄 | Resource Requirements ⚡ | Expected Outcomes 📊 | Ideal Use Cases 💡 | Key Advantages ⭐ |
|---|---|---|---|---|---|
| Multiple Choice Questions | Low; predefined options | Low; simple to design and analyze | Quantifiable, easy statistical analysis | Broad surveys, quick data collection, preference polling | Fast to answer, consistent, reduces response bias |
| Likert Scale Questions | Moderate; requires scale design | Moderate; careful labeling needed | Nuanced attitudinal data, trend analysis | Attitude measurement, employee/customer satisfaction | Captures subtle opinions, familiar format |
| Open-Ended Questions | High; open text input | High; requires qualitative analysis | Rich, detailed qualitative insights | In-depth feedback, exploratory research | Reveals unexpected themes, detailed responses |
| Rating Scale Questions | Low to moderate; numeric scale setup | Low to moderate; anchor labeling | Quantifiable evaluation data | Satisfaction ratings, benchmarking, product evaluation | Intuitive, flexible scale lengths |
| Yes/No (Dichotomous) Questions | Very low; two options only | Very low; simplest design | Binary data | Screening, factual questions, quick filters | Highest completion, easy analysis, decisive responses |
| Rank Order Questions | Moderate to high; ranking interface | Moderate; requires complex analysis | Ordinal data showing preferences hierarchy | Priority ranking, feature importance, preference studies | Forces prioritization, richer than simple choices |
| Matrix Questions | Moderate to high; grid layout | Moderate; consistent scale usage | Consistent data across multiple items | Multiple attribute evaluations, space-efficient surveys | Efficient layout, easy comparisons, reduces length perception |

Your Next Move Is Obvious

You now know the difference between a question that validates a bias and one that uncovers truth. The gap between the two is the gap between a zombie startup stumbling towards irrelevance and a company that seems to read its customers' minds.

Most founders treat feedback like a checkbox. They send a sloppy survey, brag about a 15% response rate, and let the data rot in a spreadsheet while they build features nobody asked for. Don't be that founder. Stop treating feedback like a chore and start treating it like the highest-leverage activity you can do.

From Questions to Conviction

The real art isn't just knowing the types of questions to ask in a survey; it's about sequencing them. You're not collecting data points; you're conducting an interrogation to find the truth.

Here’s the blunt reality:

- A Yes/No question tells you if a problem exists.

- A Rating Scale tells you the magnitude of that problem.

- An Open-Ended question tells you the emotional context and how to fix it.

Used alone, each is a whisper. Used together, they're a bullhorn telling you exactly where to focus. You're no longer guessing. You're operating with conviction.
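That sequence maps onto the skip logic most survey tools offer. Here's a minimal sketch of the flow as a plain function. The question wording, the 1-5 scale, and the `next_question` name are all invented for illustration.

```python
def next_question(problem_exists, magnitude=None):
    """Return the next question in the Yes/No -> rating -> open-ended sequence.

    Returns None when there is nothing left to ask.
    """
    if not problem_exists:
        return None  # No problem reported, so skip the follow-ups entirely.
    if magnitude is None:
        return "How much did this problem disrupt your work? (1-5)"
    return "What happened, and what would have fixed it?"


print(next_question(problem_exists=False))               # prints None
print(next_question(problem_exists=True))                # asks for a 1-5 rating
print(next_question(problem_exists=True, magnitude=4))   # asks the open-ended question
```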

The Real Work Begins After "Submit"

Here's the hard truth: asking the right questions is only half the battle. The other half is turning messy responses into product decisions. Drowning in thousands of open-ended text responses is a special kind of hell reserved for well-intentioned founders.

Manually tagging feedback is a fool's errand. You'll burn hours and miss patterns a machine can spot in minutes. Understanding how AI improves customer feedback integration isn't a "nice-to-have" anymore. It's the difference between acting on insight this week versus this quarter, if ever. The speed at which you translate customer language into code dictates your survival.

Your choice is simple. Keep shipping features based on gut feelings and the opinion of the loudest person in the room, or use this arsenal to build a direct pipeline to your customers' brains. One path leads to a slow death. The other leads to a product people can't live without. Your call.

Stop drowning in spreadsheets and let Backsy.ai synthesize your survey responses, support tickets, and reviews into a single source of truth so you can stop guessing and start building what customers will actually pay for.